In 2021, Gray DI’s list of potential new and emerging academic programs included Truth and Disinformation Intelligence, which we also discussed in a 2023 update. In 2024, the truth is out there, but it’s getting even harder to tell fact from fiction. Is higher education keeping up?

Training the Truth Troops: Digital Media Literacy Requirements on the Rise

Earlier this year, Inside Higher Ed reported that a growing number of institutions now include courses in digital/media literacy as a requirement for graduation. Florida State University implemented this requirement in fall 2023, and this fall, the North Dakota University System will launch a similar structure. These emerging requirements are heavily influenced by the explosion of AI technology, which now affects most aspects of daily life. Additionally, dis/misinformation is rampant, affecting media, social media, cybersecurity, politics, news, and more. In July 2024, Techopedia published “Fiction Becomes Fact As Fake News Keeps Spreading,” which states the following:

“In the U.S. fake local news sites now outnumber legitimate newspapers. Taken to new heights by the use of artificial intelligence, disinformation is not slowing down but mass-scaling and becoming more effective. The most concerning of all is the normalization of fake news, as it becomes harder every day to tell facts apart from lies.”

While AI innovation offers many positive contributions to our lives, it’s still making some big mistakes on the fact-checking front:

“After the fact-checking organization PolitiFact tested ChatGPT on 40 claims that had already been meticulously researched by human fact checkers, the A.I. either made a mistake, refused to answer or arrived at a different conclusion from the fact checkers half of the time.”

AI and Generative AI present major challenges in terms of manipulated media and deepfakes, and potentially even threats to national security. Earlier this year, DARPA announced that its Semantic Forensics (SemaFor) Program, which “seeks to create a system for automatic detection, attribution and characterization of falsified media assets,” is ready for commercialization and transition. DARPA reports that it has launched two efforts to support implementation of its new tech:

- “The first comprises an analytic catalog containing open-source resources developed under SemaFor for use by researchers and industry.”

- “The second will be an open community research effort called AI Forensics Open Research Challenge Evaluation (AI FORCE), which aims to develop innovative and robust machine learning, or deep learning, models that can accurately detect synthetic AI-generated images.”

Good News: College Programs Are Growing

Institutions have begun homing in on degree and certificate programs focused on digital media literacy/disinformation studies as the need for more skilled professionals with expertise in these areas continues to grow.

Since the approach to combating disinformation is necessarily diverse depending upon the sector to which it applies, many schools have begun incorporating courses, concentrations, or degrees in digital media literacy related to a broad range of fields: public policy, computer science, cybersecurity, sociology, ethics, political science, national security, healthcare, communications, and more.

There’s been significant progress. Arizona State University now offers a BA in Digital Media Literacy; previously, only a minor in the subject was offered. The University of Florida College of Education offers a specialization in media literacy within its MAE and Ed.S degree programs. Appalachian State University, Boise State University, and St. Thomas University all offer certificate programs. Southern Illinois University Edwardsville offers a post-baccalaureate certificate in digital media literacy; one required course is called “Propaganda in the Digital Age.” At UCLA, a minor in Information and Media Studies has required courses called “Information and Power” and “Internet and Society.” NYU offers “Disinformation and Narrative Warfare,” associated with degrees in Global Studies and Global Security, Conflict, and Cybercrime. The New School offers “Digital Disinformation: Truth, Lies, and the Rise of AI.”

One particularly interesting degree offering stands out in its specificity: American University’s MA in Media, Technology and Democracy. The program description is laser-focused:

Become a Media and Technology Policy Expert

“Internet surveillance, disinformation campaigns, and manipulation of digital media are some of the greatest threats to democracy today. To counter those threats, and to support free and equitable societies, organizations need strategic thinkers with expertise in communications, technology, and policy.”

Other related degree offerings are also on the rise. Recent program announcements include Champlain College’s Digital Transformation Leadership (Master’s, MBA, and Graduate Certificate); Augusta University’s PhD in Intelligence, Defense, and Cybersecurity Policy; University of Arkansas’ Undergraduate Microcertificate in Strategic Media Skills; and University of Virginia’s College at Wise Master of Technology and Data Analytics. This is just a partial list, and we expect it to grow.

Jobs for Media Literacy/Disinformation Graduates

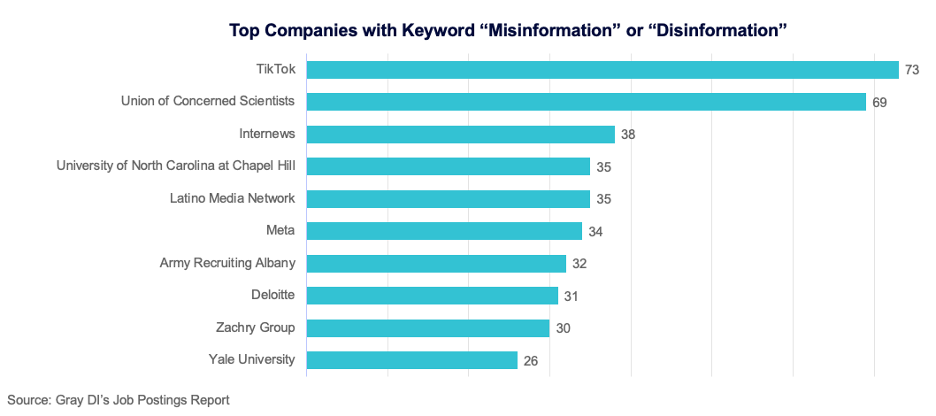

So who’s looking to hire people with a background in digital media literacy/disinformation? Quite a variety of employers, it turns out.

A closer look at detailed job descriptions in Gray’s Job Postings Dashboard reveals a fascinating array of positions, showing the breadth and depth of potential career opportunities for job seekers with a background in disinformation studies.

Examples of Detailed Job Posting Descriptions by Keywords: Misinformation and Disinformation

TikTok:

- “Product Manager, Integrity and Authenticity – Trust and Safety”

- “Communications Manager – Civility and Integrity”

- “Product Policy Manager, Health and Science Misinformation – Trust & Safety”

Union of Concerned Scientists:

- “Western States Analyst”

- “Science Network Mobilization Manager”

- “Science Fellowship: Climate Attribution Science”

- “Innovation Fellowship: AI and Governance”

Brennan Center for Justice:

- “Counsel (Entry-Level), Voting Rights and Elections Program”

Other terms that appear frequently within job description details: OSINT, digital democracy, state-backed propaganda, information manipulation, disinformation action lab, digital justice, cybersecurity incident response plans, election manipulation or interference, integrity, authenticity, foreign interference, global media specialist, content reviewer.

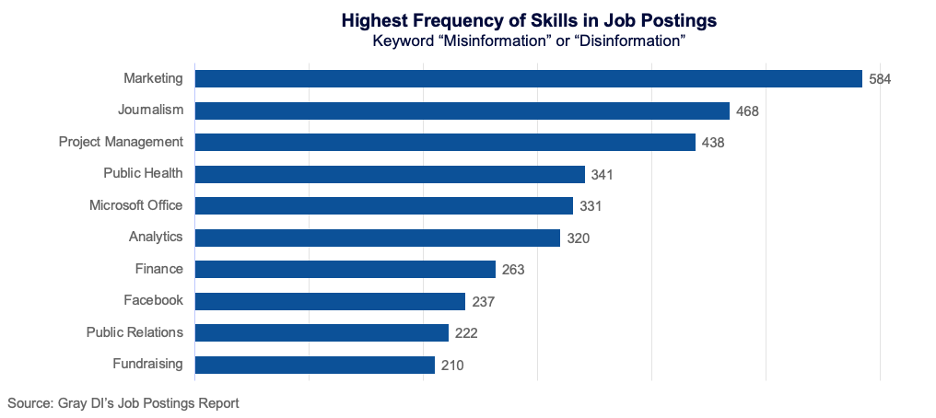

Disinformation Studies and Skill Trends

Unsurprisingly, the skills employers seek in candidates support the job descriptions mentioned above.

Responsible Journalism in the Age of Generative AI

Recently, there’s been increased focus on forming a plan for best practices regarding the use of generative AI in the newsroom (GAIN). Earlier this year, Northwestern University announced it had received a grant from the Knight Foundation through Press Forward “to help journalists and news organizations understand potential use and misuse of generative artificial intelligence.” Just last month, Northwestern posted a position for a full-time reporter to cover GAIN.

We CAN Handle the Truth

The fight against disinformation is ongoing and is unlikely to get any less complicated as technology grows. Building and strengthening an army of truth seekers from a diverse array of disciplines is the only path to victory in the war on lies. Our take? Don’t stop believing…in real news.

Join us for our 6th annual “5 Emerging Academic Programs to Watch” webinar and secure your institution’s place at the edge of educational transformation.